It’s been a couple of months since Apple unveiled its new “Vision Pro” headset and the “visionOS” operating system. Now that the initial hype has died down and we’ve had a little while to digest and dive into some of the related WWDC 2023 session videos, what can we expect from this new product? Will this finally propel Augmented Reality (AR) experiences into the mainstream market and usher in a new era of “spatial computing”? Let’s review the device’s capabilities and what we can expect after it launches next year.

What is Apple Vision Pro?

Just in case you need a quick refresher, the Vision Pro is Apple’s first headset computing device. If you paid close attention during the WWDC 2023 keynote, you might have noticed that Apple never once mentioned the terms “Augmented Reality” (AR), “Virtual Reality” (VR), or “Mixed Reality” (MR). In addition to Apple’s obsessive need to brand anything and everything, they likely avoided these terms to steer clear of any negative associations people might have from prior VR experiences. Instead, this is a new “spatial computing” device that promises to change the way we interact with computers…more on that in a bit.

Although primarily thought of as a display, the Vision Pro is actually a fully independent computing device that runs a new operating system: visionOS. This joins Apple’s existing OS lineup of iOS, iPadOS, tvOS, watchOS, and macOS. While developers can technically start building apps in a simulator and even apply to borrow a Vision Pro development kit from Apple, the product won’t be released to the public until early 2024, at a starting price of $3499. Of course, this is Apple we’re talking about, and you’ll be lucky if you only pay that much. Realistically, I expect most people will end up shelling out well over $4000 after adding things like AppleCare, lens inserts for glasses wearers, and other accessories. This is by no means a cheap piece of computing equipment, but the hardware and software capabilities Apple is promising are still very exciting.

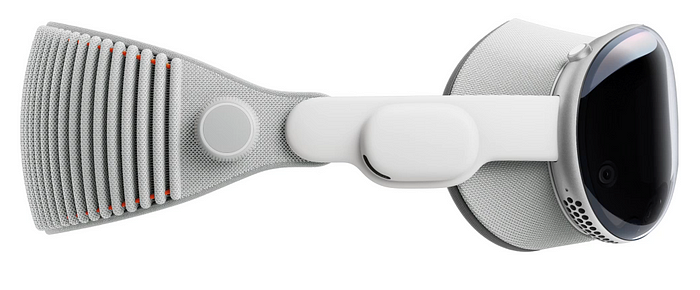

Hardware

Hardware-wise, this headset has cutting-edge specs that easily make it the most powerful and capable VR headset to date. Real-world and virtual content is combined and projected onto each of your eyeballs by a pair of postage stamp-sized screens, each packing more pixels than your 4K TV. It uses the M2 processor, the same chip found in the latest iPads and MacBooks. Also included is a new “R1” processor dedicated exclusively to processing real-time sensor data. Together, these two chips should keep latency extremely low, which is critical to keep people from getting sick while using the device.

Sensor-wise, this thing is also loaded. In addition to all the standard smartphone sensors you would expect, such as a microphone, accelerometer, gyroscope, and LiDAR, there are something like 14 cameras built into the device. You’ve got main cameras, downward cameras, side cameras, and even inward-facing TrueDepth IR cameras for eye tracking. At this point, I feel like Apple might as well have added a couple of cameras on the back to give you some sort of cool (but surely nauseating) 360° vision.

There is also an external display, which is interesting since it’s not something we’ve seen on VR headsets before, and it actually serves a couple of useful functions. First, it allows others to see your eyes when you’re interacting with them while wearing the headset. Since the device isn’t actually see-through, Apple uses the internal cameras to project a virtual representation of your actual eyes onto the external screen. Second, the external display lets others see when you’re “fully immersed” in a VR experience. In other words, when you’re busy watching a movie on your own personal virtual 100-foot-wide movie screen or doing some other immersive task like gaming, people can see that you likely don’t want to be bothered.

The internal eye tracking also enables foveated rendering, which renders the area you’re looking at in the highest detail and your peripheral vision in less detail, similar to how our eyes work in real life.
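To make the foveated rendering idea a bit more concrete, here is a toy Python sketch. It is not Apple’s implementation (Apple hasn’t published one); it only illustrates the general technique of choosing a render resolution for each screen tile based on how far, in degrees, that tile sits from where you’re looking. The thresholds `FOVEA_DEG` and `MID_DEG` and the 1.0/0.5/0.25 scales are made-up illustrative values, and approximating angular distance as a flat 2D distance is a simplification.

```python
import math

# Toy foveated-rendering sketch (NOT Apple's implementation).
# Hypothetical thresholds, in degrees of visual angle from the gaze point.
FOVEA_DEG = 5.0   # assumed radius rendered at full detail
MID_DEG = 15.0    # assumed radius rendered at medium detail


def resolution_scale(eccentricity_deg: float) -> float:
    """Fraction of full resolution to use at a given angular distance from the gaze point."""
    if eccentricity_deg <= FOVEA_DEG:
        return 1.0   # where you're looking: full detail
    if eccentricity_deg <= MID_DEG:
        return 0.5   # near periphery: half resolution
    return 0.25      # far periphery: quarter resolution


def tile_scales(gaze, tiles):
    """Map each tile center (x_deg, y_deg) to a resolution scale.

    Simplification: angular distance is approximated as the flat 2D
    Euclidean distance between the tile center and the gaze point.
    """
    gx, gy = gaze
    return {
        tile: resolution_scale(math.hypot(tile[0] - gx, tile[1] - gy))
        for tile in tiles
    }


if __name__ == "__main__":
    tiles = [(0.0, 0.0), (10.0, 0.0), (30.0, 0.0)]
    # Looking straight ahead: the center tile gets full detail.
    print(tile_scales((0.0, 0.0), tiles))
    # Glancing to the right: the full-detail region follows the eyes.
    print(tile_scales((30.0, 0.0), tiles))
```

Because each tile’s scale depends only on its distance from the gaze point, the full-detail region simply follows your eyes, and the savings come from rendering most of the periphery at a fraction of full resolution.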

In addition to enabling highly detailed and immersive VR and AR experiences, all of the processing power and plethora of sensors let you interact with the device without any external hardware. No need for clunky VR controllers or base stations set up on the walls behind you. If desired, you can still connect external input devices like game controllers and keyboards, but they aren’t required to use the device.

Software

On the software side of things, visionOS, the new operating system tailored for the Vision Pro, aims to integrate seamlessly into Apple’s current application and developer ecosystem. Existing iPhone and iPad apps are supported out of the box and run in a virtual window in front of you. Presumably, the work Apple did to let iPhone and iPad apps run on macOS, via technologies like Mac Catalyst and Apple Silicon, is being reused for this use case. Current Apple app developers also get a head start, since building visionOS apps relies on familiar foundational technologies such as SwiftUI, ARKit, RealityKit, and several other frameworks that have been available for years. Beyond the specifics of integrating the AR/VR aspects of a visionOS app, there shouldn’t be many new frameworks or development techniques that developers need to learn.

With the Vision Pro having 14 cameras and tons of sensors looking around your space constantly while you’re using it, it’s nice to hear that Apple has taken privacy seriously with regard to what data apps can access. For instance, apps need to request access to raw sensor data via a standard system permission prompt, which users are already familiar with for other privacy-sensitive features like location and ad tracking. Only once permission is granted will apps be able to unlock advanced capabilities like scene understanding or high-resolution skeletal hand tracking.

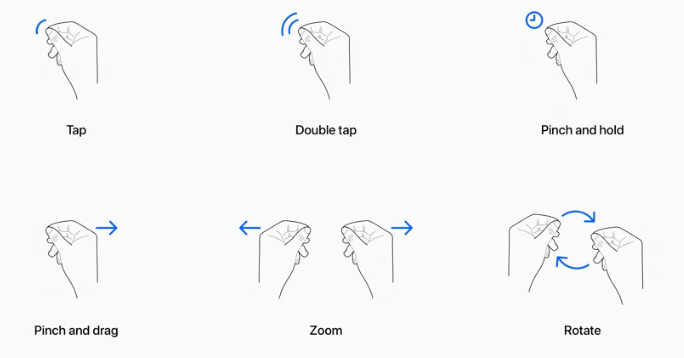

Another welcome privacy feature provided by the OS is that apps can’t track precisely where you’re looking. Although the Vision Pro has eye tracking built in, data about where a user is looking isn’t made directly available to apps. Instead, apps only receive touch events when you virtually “click” on something using the new pinch gesture. This is a very welcome feature, since I’m sure advertisers would love to know exactly how long you looked at an ad or piece of content so they could harvest even more of your information.

The Vision Pro also introduces a brand-new authentication mechanism. For a while now, Touch ID and Face ID biometrics have been integrated into several of Apple’s products, allowing users to quickly unlock their devices via fingerprint or face, respectively. The Vision Pro will be outfitted with a new biometric solution called Optic ID, which scans your iris. As if straight out of a science fiction movie, authenticating with your eyes is finally a reality.

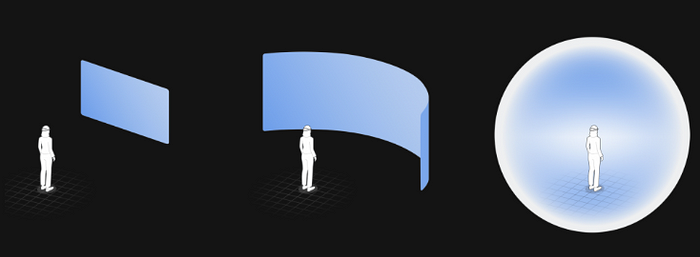

Lastly, one interesting aspect of the software and apps is the user-controllable immersion level. A digital crown, similar to the one on Apple Watch, allows users to control how “immersed” they are in the virtual space. Turn the dial all the way down to use the device in a “passthrough” mode where you can interact with 3D elements and apps in front of you, while staying aware of your physical surroundings. Alternatively, turn the dial all the way up to “enter the matrix” and be fully immersed in a virtual world.

visionOS

The name “visionOS” apparently didn’t come easily to Apple. Pre-WWDC rumors, and even snippets in several released session videos and documentation, refer to the operating system as “xrOS”. Presumably, Apple was uneasy about the name “xrOS” and its close association with existing XR/VR/AR/MR technologies, and opted to change the name to “visionOS” at the last minute.

Version-wise, visionOS will start at a true 1.0 release. That’s interesting, given that iPadOS and tvOS split off from iOS several years ago but kept the existing OS version numbering; all three of these operating systems will be on version 17 in the fall. With visionOS, however, Apple chose a fresh start at version 1.0. It will be interesting to see how many of the underlying technologies and interactions visionOS pulls from iOS and macOS versus what gets entirely reimagined or rebuilt from the ground up.

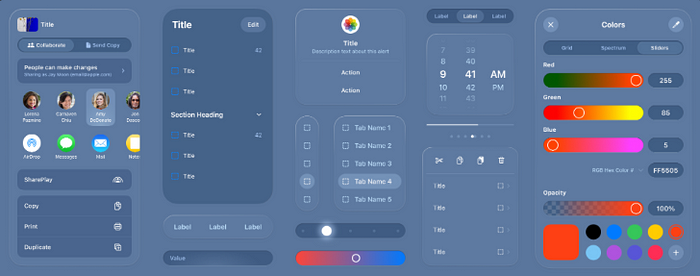

While visionOS is a new Apple platform and OS, including a new App Store, it will still have a familiar look and feel compared to Apple’s other operating systems. We can already see that UI elements and interactions take heavy inspiration from iOS, iPadOS, and tvOS. For example, the Focus Engine from tvOS provides a natural mechanism for automatically highlighting elements as you look at them. All of this serves to enable what Apple calls “spatial computing”.

Spatial Computing

“Spatial computing” is how Apple describes the experience of using a Vision Pro. By touting it as a totally new computing paradigm, Apple is heavily shaping how users will learn to interact with the system, much as it did when it introduced multi-touch devices and features such as pinch-to-zoom, flick-to-scroll, and the virtual keyboard. I expect these new gestures and interactions will start to become standard across the industry.

Instead of a keyboard and mouse or a touchscreen, you’ll primarily interact with the system using your eyes, your fingers, and sometimes your voice. Unfortunately, the device can’t read your thoughts yet, so you still need a keyboard (virtual or physical) for typing unless you use the speech-to-text (dictation) capabilities now built into Apple’s operating systems. However, given the speed and precision that looking and pinching seem to enable, this may be as close as we come to accomplishing tasks simply by thinking. Perhaps only brain-computer interfaces like Neuralink could offer significant improvements from here.

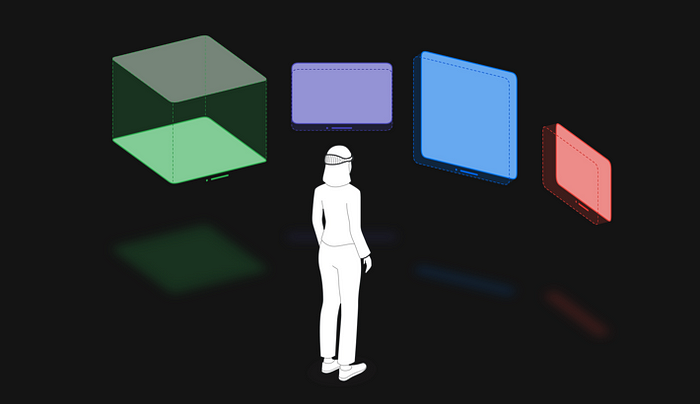

In addition to new gestures, the other primary aspect of spatial computing will be the variety of app experiences enabled by different levels of immersion. Apple splits app experiences into two categories: those that occupy the “shared space” and those that occupy the “full space”. In the shared space, users interact with windows and volumes. Windows are 2-dimensional, much like what you’re used to on a conventional desktop. Volumes are 3-dimensional scenes that live alongside your windows in the shared space. In the full space, a single app takes over: other apps are hidden, and the app’s content can even fully replace your surroundings. We will have to wait and see whether developers feel compelled to offer both types of experiences in their apps.

Downsides?

With all the advanced technology and new experiences this product promises, could there really be any drawbacks we need to watch out for? I think the first drawback that’s obvious to many people is the cost. At a starting price of $3499, it’s definitely prohibitive for mainstream adoption. We’ll likely see popularity ramp up slowly over at least a couple of hardware versions as capabilities improve and the price drops. Maybe in a few years, we’ll see an “Apple Vision” (non-Pro) headset introduced at a lower price, which would help make the device accessible to more people.

You also have to remember that this is technically a first-generation Apple product. Historically, first-gen Apple products tend to be a bit underpowered. Think back to the first iPhone, iPad, and Apple Watch models: compared to just one or two hardware versions later, those original devices were frighteningly slow. With the Vision Pro, the processing power looks adequate, but that seems to come at the expense of battery life. Apple is advertising only a 2-hour runtime, which means you’ll probably want to stay tethered to a charger most of the time when using it. This will be the main early-adopter tax you’ll pay, but the capabilities still make it a compelling product.

Although we don’t know for sure, another likely drawback will be the device’s lack of support for other VR platforms. Personally, I don’t own a VR headset right now, so it would be great if I could buy a Vision Pro and also use it with other VR platforms like SteamVR for PC gaming or Meta’s (formerly Facebook’s) metaverse. I wouldn’t bet on either becoming a compatible option anytime soon, but we can still dream.

The social aspect is also something to consider here. For better or worse, everyone’s eyes are already glued to their phones much of the time these days; now we’re aiming to glue everyone’s phones to their eyes. We may also experience some uncanny valley effects. Will the virtual eyes shown on the external display look weird or okay? What about the virtual persona used in place of your actual video feed when you take a video call while wearing the headset? Will the device’s face-scanning capabilities generate realistic-looking avatars, or will they be creepy? Popular video conferencing solutions like Teams and Zoom have already started introducing virtual personas, so maybe it will become the norm and all work out in the end.

What’s Next?

If you’re an app developer, you can already start developing visionOS apps. The visionOS simulator is included in the latest Xcode 15 betas, and you can even submit visionOS apps to Apple for approval. Remember that this is a new platform with a new App Store, which doesn’t happen very often. Now is your chance to get in on the ground floor, so get your fart apps and flashlight apps ready!

The other big question on everyone’s mind is whether this will be the tipping point for VR. Will this finally get everyday consumers excited about VR-type experiences? It will be interesting to see whether the Vision Pro gets widespread use in public, like iPhones, iPads, AirPods, and Apple Watches do, or whether VR products continue to remain “at home” devices. Also, how far will people push the boundaries when using the Vision Pro? Will we see people running or working out with it, or even doing questionable things like driving with it? 😬

Finally, will the Vision Pro actually change the way we compute day to day? Will I eventually reach for the headset instead of my MacBook when it’s time to get work done? I expect it will depend on the task at hand much of the time. After all, the iPad was long positioned as a laptop replacement but has never quite lived up to that promise for me.

Regardless of the unknowns, the Apple Vision Pro and visionOS represent a significant step forward in augmented and virtual reality technologies. Whether you like it or not, the age of spatial computing is upon us, and with it, we are one step closer to living in Cyberspace.