It’s May, and you know what that means! OK, maybe you don’t – it’s time for Google I/O! Every year, thousands of developers flood Mountain View, California, to learn about the latest innovations and announcements from the software giant. The event kicked off today with its opening keynote presentation, and while many of us watched from our office in Dallas, a few lucky Rocketeers were onsite to experience the action firsthand. Over the next several days, we will share insider details, perspectives, and helpful recaps of what we uncover, starting with today’s keynote.

Make Good Things Together

Setting the theme in the first minute of the presentation, Google kicked off its annual developer conference with the phrase “make good things together.” That theme ran through nearly every segment of the presentation, whether Google was tackling challenges to better the world itself or giving everyone the tools to do so.

Here are four key things that caught our attention and that we believe will have the greatest impact on business in the next year: Google Assistant, App Actions, App Slices, and ML Kit. More than those four topics piqued our interest, of course, but these are the ones we expect to matter most to businesses. Keep in mind that several of these are sneak peeks, and some will gain many more features and capabilities in the coming months – so be sure to check back in for more information as it is released.

1. Google Assistant

Google Assistant debuted two years ago and has grown into much more than it was at launch. At I/O today, three new features were announced for Assistant that could truly give it the edge over Alexa and Siri (and Cortana, I guess). Those features are Continued Conversation, Multiple Actions, and improved interactions with Google Assistant Apps (the really big one).

Continued Conversation lets Assistant keep answering follow-up questions without the user having to preface each one with “Okay Google”. Once the user has finished the conversation at hand, a simple “thank you” will end the interaction and kill the mic. The feature also lets Assistant tell the difference between conversational remarks and actual requests.

Multiple Actions sounds simple but is extremely complex. Simply put, it allows the user to say things such as “what time is it and how long will it take me to get to work?” and get answers to both questions without having to ask Assistant each one separately.

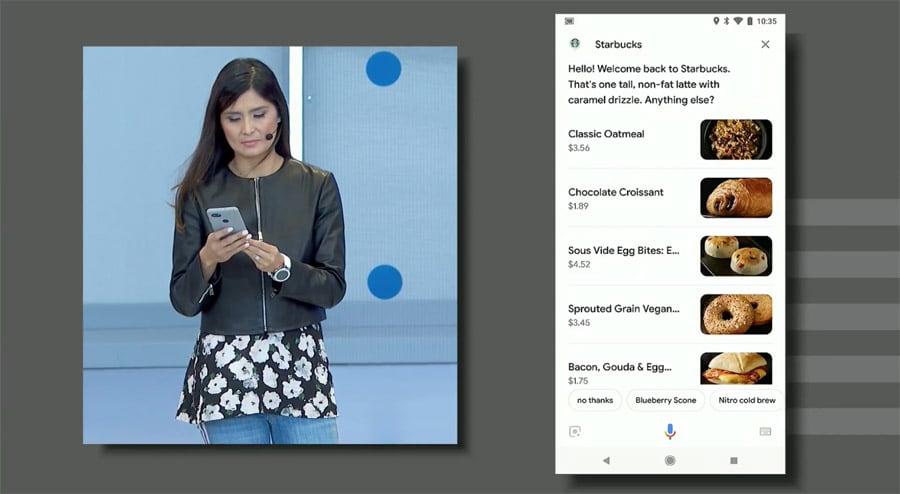

Google Assistant Apps have some new capabilities as well. To get ready for Smart Displays, Google gave Assistant a visual overhaul. Now, information is displayed full screen and can include additional visuals such as videos and images. eCommerce applications can benefit greatly from the visual overhaul, as the transition into Assistant Apps is much easier and more natural for the user. Previously, a user had to ask to be connected to a Google Assistant App, but now a simple request such as “order my usual from Starbucks” will take the user directly into the Starbucks Assistant App. As seen above, the user can quickly and easily select additional menu items to include in their order via the new visual interface. From first request to completed order, this interaction will likely involve fewer steps for the user than going directly into the Starbucks app (assuming it’s not on the user’s home screen).

2. App Actions

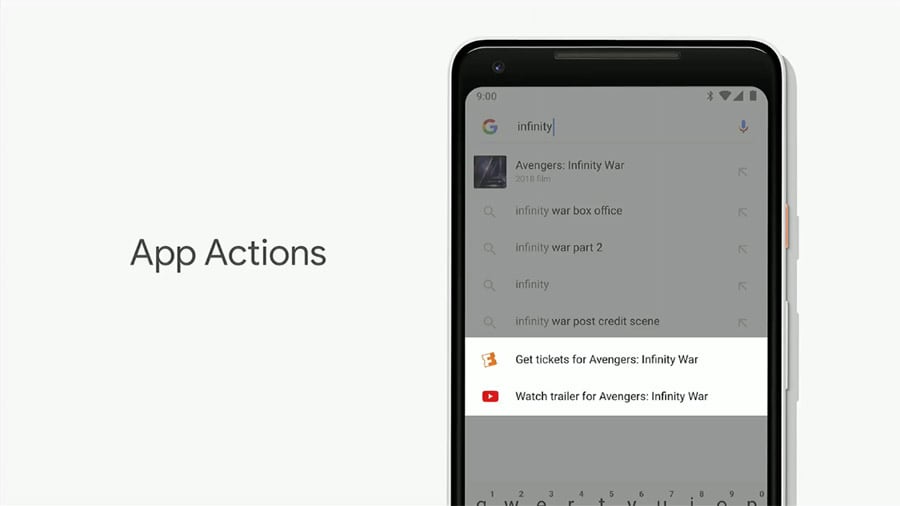

Suggestions already appear below the Google search bar while typing. Soon, suggested actions will begin to appear as well. This might not sound like much, but imagine someone searching for a particular product, like laundry detergent: the Walmart app could prompt an App Action to “add to my grocery order” for pickup later.

As shown above, Google provided an example of searching for Infinity War. When the user searched for it, they were prompted with options to buy tickets or watch the trailer. This is a great example of a contextual interface, but it doesn’t just happen like magic. Apps need to be optimized to allow for this type of interaction.

In another example, Google placed App Actions in the launcher. The suggestions are based on your everyday behavior. In this instance, it suggests that the presenter call Fiona, as he usually does at this time of day, or resume the song he last listened to, since his headphones are connected.

3. App Slices

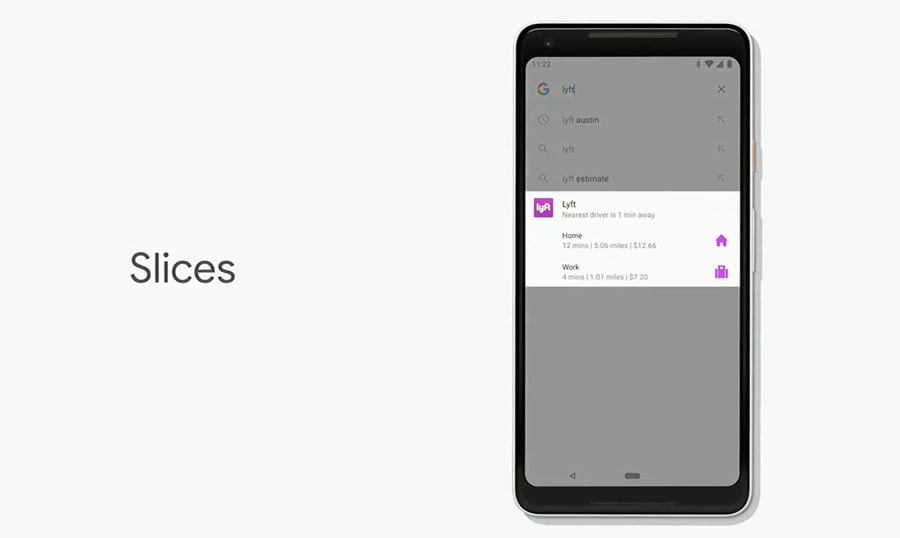

Similar to App Actions, App Slices also appear in search, but there is a difference. Instead of simply suggesting an action, App Slices use the functionality of an app to display information in search. They can present a clip of a video, allow the user to check in to a hotel, or even show photos from the user’s last vacation.

In the example shown here, simply searching “Lyft” brings up the suggested routes in the Lyft app and displays the cost of the trip as well. We’ll find out soon which App Slices are available, so be sure to check back to learn more about the potential benefits of this innovation.

4. ML Kit

Part of Firebase, ML Kit (Machine Learning Kit) now offers a range of machine learning APIs for businesses to leverage. Instead of having to build custom ML algorithms for anything and everything, optimize them for mobile usage, and then train them with hundreds, or preferably thousands, of samples, businesses can now use the “templates” Google provides for some common needs.

Leveraging TensorFlow Lite and available on both Android and iOS, ML Kit will make it easier to integrate image labeling, text recognition, face detection, barcode scanning, and more. It can even be used to build custom chatbots.

But That’s Not All

There were plenty of other announcements in the keynote, and even more are on the way as the week goes on. For instance, right after the keynote, we found out that Starbucks had nearly as many orders come through its PWA as via its mobile app. We learned that Google Assistant can now make phone calls to schedule appointments – without the customer service representative realizing it’s a computer. Google announced a new Shush mode that completely silences notifications when a phone is placed face down on a table, and a lot more.

Even among the four topics covered in this recap, there is more information to come as the week goes on. We’ll dive into each as we get more information back from our Rocketeers in California, so be sure to check back in a couple of days.
